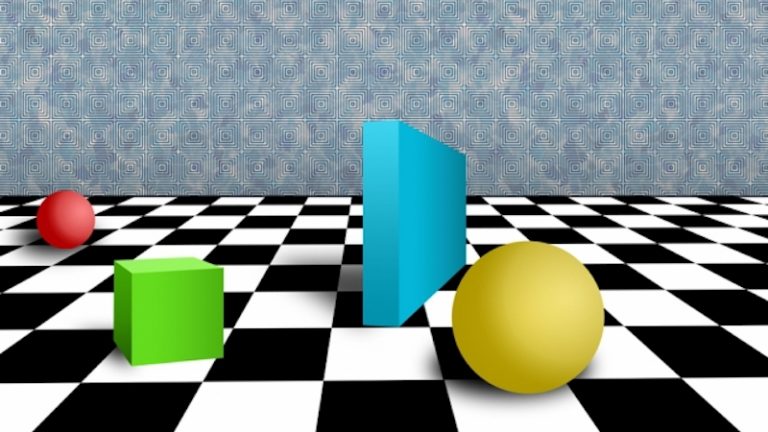

Researchers from MIT have designed ADEPT, a model that intuitively understands the relationships between objects in our world and how those objects should behave. Just like an infant, the model is ‘surprised’ when objects behave unexpectedly. This research could help build smarter AI and could also advance our understanding of infant cognition.

ADEPT stands for Approximate Derendering Extended Physics Tracking.

ADEPT: Building infant cognition into AI agents

MIT’s model tracks objects as they move around a video scene and predicts where they will go based on their underlying physics. While observing the objects frame by frame, the model outputs a ‘surprise’ signal that grows with how far the objects’ behavior departs from its predictions: the bigger the mismatch (for example, an object vanishing or teleporting), the bigger the ‘surprise’.
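The idea of a prediction-error ‘surprise’ signal can be illustrated with a toy Python sketch. This is not ADEPT’s implementation: the constant-velocity predictor, the Euclidean-distance score, and the hypothetical `VANISH_SURPRISE` penalty are assumptions chosen for clarity, whereas the actual model, as its paper title indicates, reasons over coarse probabilistic object representations.

```python
import math
from typing import List, Optional, Tuple

Position = Tuple[float, float]

# Hypothetical fixed penalty when a tracked object disappears (an assumption,
# not a value from the ADEPT paper).
VANISH_SURPRISE = 10.0


def predict_next(prev: Position, curr: Position) -> Position:
    """Toy 'physics': assume constant velocity and extrapolate one frame ahead."""
    vx, vy = curr[0] - prev[0], curr[1] - prev[1]
    return (curr[0] + vx, curr[1] + vy)


def surprise_per_frame(track: List[Optional[Position]]) -> List[float]:
    """Return one surprise score per frame, starting from the third frame.

    Each score is the distance between the predicted and observed position.
    None in the track marks a frame where the object is not visible.
    """
    scores = []
    for t in range(2, len(track)):
        prev, curr, obs = track[t - 2], track[t - 1], track[t]
        if prev is None or curr is None:
            # Not enough history to make a prediction, so report no surprise.
            scores.append(0.0)
        elif obs is None:
            # The object vanished: a large, fixed surprise.
            scores.append(VANISH_SURPRISE)
        else:
            scores.append(math.dist(predict_next(prev, curr), obs))
    return scores


if __name__ == "__main__":
    smooth = [(0, 0), (1, 0), (2, 0), (3, 0)]
    teleport = [(0, 0), (1, 0), (2, 0), (8, 0)]
    vanish = [(0, 0), (1, 0), (2, 0), None]
    print(surprise_per_frame(smooth))    # [0.0, 0.0]
    print(surprise_per_frame(teleport))  # [0.0, 5.0]
    print(surprise_per_frame(vanish))    # [0.0, 10.0]
```

An object that keeps moving as predicted yields zero surprise, while a jump or a disappearance produces a spike, mirroring the qualitative behavior described above.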

“By the time infants are 3 months old, they have some notion that objects don’t wink in and out of existence, and can’t move through each other or teleport.”

Kevin A. Smith, Research scientist*

The model’s surprise levels were comparable to those of human subjects who watched the same videos.

Authors of the ADEPT Model

- Kevin A. Smith*, Research scientist in the Department of Brain and Cognitive Sciences (BCS), member of the Center for Brains, Minds, and Machines (CBMM)

- Lingjie Mei*, Undergraduate in the Department of Electrical Engineering and Computer Science

- Shunyu Yao*, BCS research scientist

- Jiajun Wu, PhD ’19

- Elizabeth Spelke, CBMM investigator

- Joshua B. Tenenbaum, Professor of computational cognitive science, researcher in CBMM, BCS, and the Computer Science and Artificial Intelligence Laboratory (CSAIL)

- Tomer D. Ullman, CBMM investigator, PhD ’15

Article source & further reading:

MIT News: Helping machines perceive some laws of physics

CSAIL: Modeling Expectation Violation in Intuitive Physics with Coarse Probabilistic Object Representations